Impressions & Insights from Machine Learning Week Europe

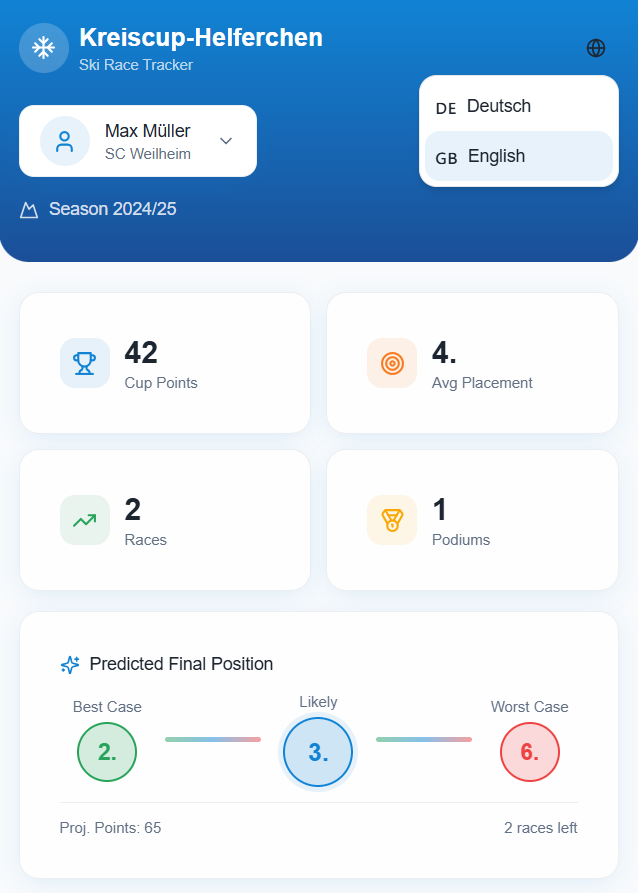

Winter is coming… at least here at our home in Weilheim in Upper Bavaria. The day after Machine Learning Week Europe ended, we had our first snowfall. Life has frozen over – at least outside. On LinkedIn, however, the discussion is heating up: is the AI bubble bursting, and is the next AI winter coming?

I’m taking advantage of the winter calm to share with you my impressions and insights from Machine Learning Week Europe, which I had the privilege of moderating on Monday and Tuesday last week in Berlin. There, too, the question was asked frequently: (when) will the AI bubble burst?

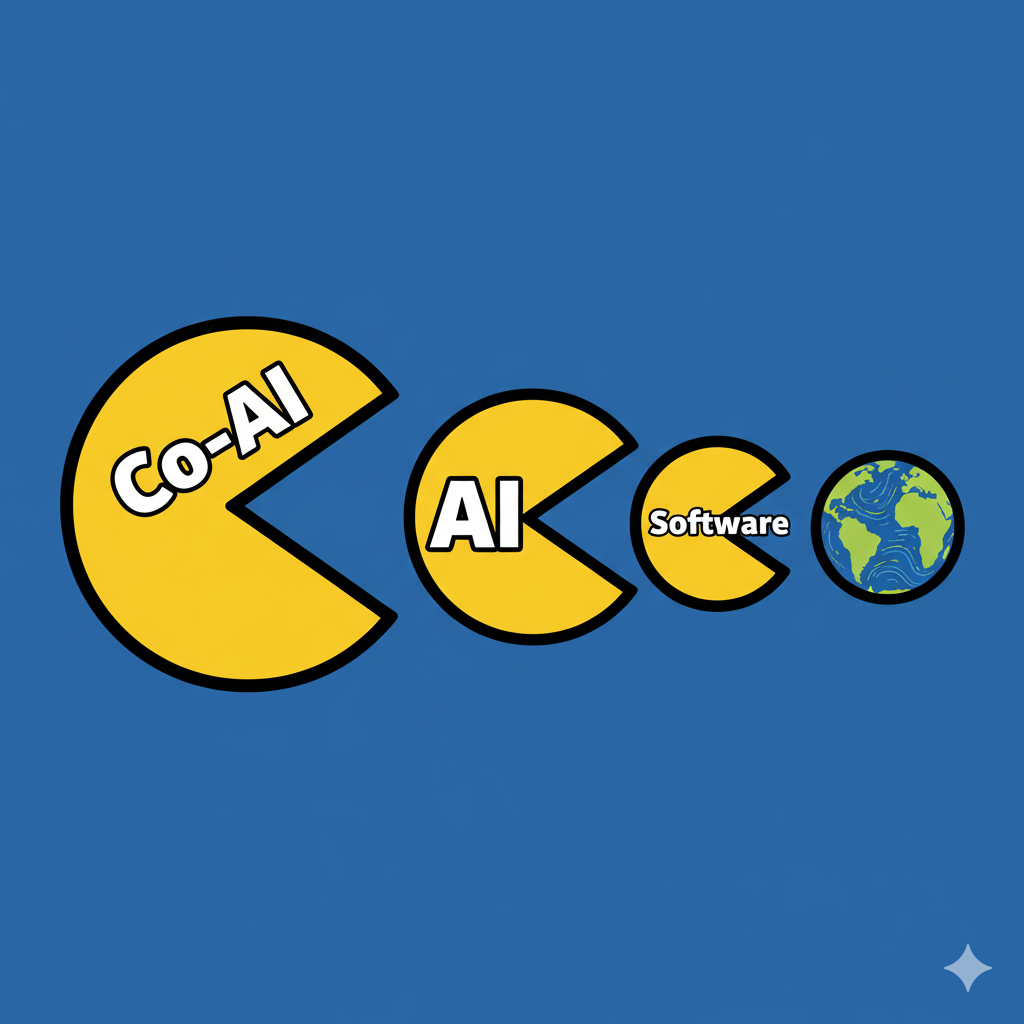

Most agreed that it is a bubble: the exaggerated expectations placed on AI are proving premature. Innovation and transformation take time – and money! A ChatGPT subscription alone does not make for disruption. What became clear in many presentations and discussions over the two days of the conference: tooling is the first, easy step. Then comes the hard work: training, tailoring, and testing.

What the nearly 50 case studies, deep dives, and keynotes also revealed: AI is so much more than generative AI. Not every problem requires an LLM as a solution. The trend this year was very clear: keep it simple, stupid. If a simple linear regression solves the problem, then that's the solution (for example, for the new Schufa score). Period.

If an LLM delivers better performance, as it does in the newsletter recommendation engine at DIE ZEIT, then the LLM is the right tool for the job. Deciding which tool is the right solution requires a great deal of experience: Prof. Dr. Sven Crone brought 20 years of forecasting experience to the stage in his remarkable keynote "What has AI Ever Done for Forecasting? Anecdotes and Case Studies from the Past Two Decades." His disillusioning conclusion: AI in forecasting often still fails for lack of data.

Another striking observation: LLMs were used mainly for internal use cases. The reason is that the greatest expense with LLMs is operations: LLMOps (input, model, and output monitoring) often accounted for most of the cost. Internal solutions reduce that burden: the risk of prompt injection is lower, and there is always a HITL, i.e., an employee in the loop.

Right at the start of the conference, the keynote "From Assistants to Agents: Building AI That Acts, Not Just Answers" by Farah Ayadi (Principal Product Manager at feedly) showed that the key to AI success is not the system prompt but the system design. Even with smart prompt engineering, a single LLM remains nothing more than a stochastic parrot. Intelligence is created through intelligent context engineering and agentic design. The decisive factors are information (context), functions (tools), and how the agents communicate and collaborate with one another to achieve a specific goal.

Conclusion of the conference: there was no sign of winter blues. On the contrary: experimentation is over; now it's about exploitation. Yes, winter is coming, both seasonally and economically, but AI is just beginning to blossom.
